Centralized Intelligence for Dynamic Swarm Navigation

The Problem Statement: Multi-Robot Navigation System for Dynamic Industrial Environments

Design a system for centralized-intelligence-based path planning and navigation under dynamic conditions, such as a warehouse with moving equipment, forklifts, and humans.

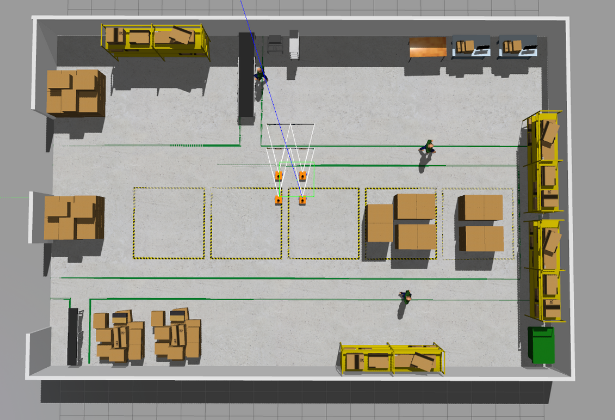

Swarm Robots in Warehouse Simulation


Background

Bharat Forge Ltd., a flagship company of the Kalyani Group, is a global leader in precision engineering and advanced manufacturing 🏭. With complex factory and warehouse environments that are constantly evolving, their need was clear:

How can autonomous robots navigate and perform tasks efficiently in dynamic indoor environments — without GPS, markers, or manual intervention?

The Inter-IIT Tech Meet 2025 problem statement challenged us to engineer a single, centralized brain for a swarm of autonomous mobile robots (AMRs) to navigate and complete tasks in such unpredictable spaces.

Our Approach

We designed a ROS2-based multi-agent system backed by a shared NoSQL database, capable of:

- Real-time obstacle avoidance using DWA (Dynamic Window Approach),

- Obstacle labelling with computer vision,

- Memory persistence and map sharing, and

- Scalable task assignment and navigation.

Our focus: scalability, autonomy, and continuous learning.

System Architecture

Core Components 🔁

- ROS 2 Humble: Middleware for multi-robot control and communication.

- Gazebo: Simulates an industrial workspace of 10 m × 10 m or larger, with dynamic and static objects.

- MongoDB: A shared NoSQL database for map persistence and real-time logging.

- YOLOv8: Real-time object detection of obstacles, tools, and dynamic agents.

- Reinforcement Learning (RL): Intelligent path planning with dynamic prioritization.

Multi-Bot Simulation Setup 📦

We created a custom Gazebo environment with:

- At least 4 autonomous robots (differential-drive and quadruped variants),

- 3+ dynamic obstacles (humans, machines),

- 10+ objects (tools, extinguishers, parts),

- Random spawning logic for scalability testing.
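The post does not detail the random spawning logic. A minimal sketch, assuming a rectangular floor area and a simple rejection-sampling rule that keeps every spawned robot or object at least a minimum distance from the others, could look like this (`random_spawns` and all of its parameters are hypothetical):

```python
from __future__ import annotations
import math
import random

def random_spawns(n: int, width: float, height: float, min_sep: float = 1.0,
                  margin: float = 0.5, seed: int | None = None,
                  max_tries: int = 10_000) -> list[tuple[float, float, float]]:
    """Rejection-sample n (x, y, yaw) poses inside a width x height area (metres),
    keeping every pair of poses at least min_sep metres apart."""
    rng = random.Random(seed)  # seeded generator -> reproducible layouts
    poses: list[tuple[float, float, float]] = []
    tries = 0
    while len(poses) < n:
        if tries >= max_tries:
            raise RuntimeError(f"could not place {n} poses with min_sep={min_sep}")
        tries += 1
        # Candidate position, kept `margin` metres away from the walls.
        x = rng.uniform(margin, width - margin)
        y = rng.uniform(margin, height - margin)
        # Accept only if it is far enough from everything placed so far.
        if all(math.hypot(x - px, y - py) >= min_sep for px, py, _ in poses):
            poses.append((x, y, rng.uniform(-math.pi, math.pi)))
    return poses
```

Seeding the generator makes a given clutter layout reproducible across scalability runs; the resulting poses would then be handed to whatever spawn mechanism the Gazebo setup uses.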

Each robot published its position, task state, and sensory feedback via ROS2 topics to be logged in MongoDB.
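To make the logging step concrete, here is a hypothetical helper that packs one robot's state into a document shaped like the `robot_logs` collection described under Shared Database & Persistent Memory. It is only a sketch: in the real system the values would presumably come from ROS 2 subscriptions (for example odometry and laser scans), and the document would be written with PyMongo's `insert_one`; neither part is shown here.

```python
from __future__ import annotations
from datetime import datetime, timezone
from typing import Any

def make_robot_log(robot_id: str, x: float, y: float, task_state: str,
                   scan_ranges: list[float],
                   obstacles: list[dict[str, Any]] | None = None,
                   confidence_score: float | None = None,
                   now: datetime | None = None) -> dict[str, Any]:
    """Build one robot_logs document (hypothetical helper; field names follow the post)."""
    now = now or datetime.now(timezone.utc)
    return {
        # ISO-8601 UTC string in a single fixed format, e.g. "2024-11-05T15:00:00Z".
        "timestamp": now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "robot_id": robot_id,
        "X": x,
        "Y": y,
        "obstacles": obstacles or [],
        "confidence_score": confidence_score,
        "scan_ranges": list(scan_ranges),  # copy so later scans don't mutate the log
        "tasks_done": task_state,
    }
```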

Object Detection & Environment Mapping 🧭

To reduce reliance on markers, we used YOLOv8, fine-tuned on:

- Fire extinguishers

- Toolboxes

- Human workers

- Warehouse equipment

YOLO detections were timestamped and stored in MongoDB with the fields `object_id`, `type`, `position`, and `velocity`.

These updates fed a real-time map, broadcast over ROS 2 for all swarm agents to reference.
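Because each stored record carries a `velocity` field, the pipeline has to estimate how fast a detected object is moving. The post does not say how; one simple option, assumed here, is a finite difference between two consecutive timestamped sightings of the same `object_id`. The `Detection` class and the `observe` function are hypothetical:

```python
from __future__ import annotations
from dataclasses import dataclass, asdict

@dataclass
class Detection:
    object_id: str
    type: str                       # YOLO class label, e.g. "human"
    position: tuple[float, float]   # (x, y) in the map frame, metres
    velocity: tuple[float, float]   # (vx, vy), metres per second
    stamp: float                    # detection time, seconds

def observe(tracks: dict[str, Detection], object_id: str, obj_type: str,
            position: tuple[float, float], stamp: float) -> Detection:
    """Record a sighting; estimate velocity from the previous sighting of the same id."""
    prev = tracks.get(object_id)
    if prev is not None and stamp <= prev.stamp:
        return prev  # stale or duplicate frame: keep the newer track unchanged
    if prev is None:
        velocity = (0.0, 0.0)  # first sighting: no motion estimate yet
    else:
        dt = stamp - prev.stamp
        velocity = ((position[0] - prev.position[0]) / dt,
                    (position[1] - prev.position[1]) / dt)
    det = Detection(object_id, obj_type, position, velocity, stamp)
    tracks[object_id] = det
    return det

# asdict() turns a Detection into a plain dict suitable for storing as a document.
tracks: dict[str, Detection] = {}
observe(tracks, "human_1", "human", (0.0, 0.0), 10.0)
doc = asdict(observe(tracks, "human_1", "human", (1.0, 0.5), 10.5))
```

A production tracker would usually smooth this estimate (for example with a Kalman filter), since frame-to-frame detections are noisy.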

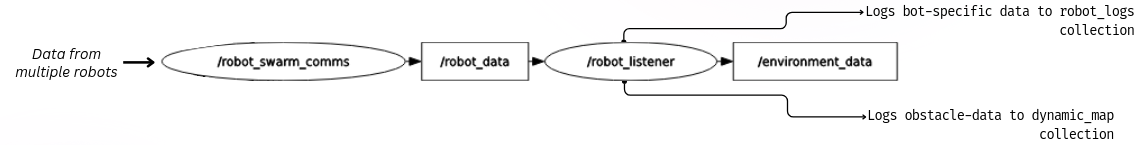

Shared Database & Persistent Memory 📡

Custom collections created on the MongoDB server


We implemented a logging system in MongoDB with two collections, `robot_logs` and `dynamic_map`:

```json
{
  "robot_logs": {
    "timestamp": "2024-11-05T15:00:00Z",
    "robot_id": "bot_1",
    "X": 3.1, "Y": 7.2,
    "obstacles": [...],
    "confidence_score": "confidence_score",
    "scan_ranges": "scan_ranges",
    "tasks_done": "navigating"
  },
  "dynamic_map": {
    "environment_id": "sector_2",
    "map_data": [...],
    "last_updated": "2024-11-05T14:58:10Z"
  }
}
```

This enabled task resumption and dynamic path re-evaluation even after failure or system restarts.
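As an illustration of task resumption, the sketch below recovers the newest log entry per robot and lists the robots whose last recorded state was unfinished. The function names and the set of "terminal" `tasks_done` values are hypothetical. Run against MongoDB itself, the same "latest document per `robot_id`" query is typically written as an aggregation with a `$sort` stage followed by `$group` using `$first`.

```python
from __future__ import annotations
from typing import Any, Iterable

def latest_state_per_robot(logs: Iterable[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Return the newest robot_logs document for each robot_id.

    Assumes timestamps are ISO-8601 UTC strings in one fixed format
    ("YYYY-MM-DDTHH:MM:SSZ"), which sort lexicographically in time order.
    """
    latest: dict[str, dict[str, Any]] = {}
    for doc in logs:
        rid = doc["robot_id"]
        if rid not in latest or doc["timestamp"] > latest[rid]["timestamp"]:
            latest[rid] = doc
    return latest

def robots_to_resume(latest: dict[str, dict[str, Any]],
                     terminal_states: frozenset[str] = frozenset({"idle", "completed"})) -> list[str]:
    """Robots whose last logged state was not terminal (state names are assumptions)."""
    return sorted(rid for rid, doc in latest.items()
                  if doc["tasks_done"] not in terminal_states)
```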

Task Ranking & Autonomous Scheduling 📋

Every robot fetched tasks from a central MongoDB `tasks` collection, ranked by:

- Estimated time to reach the task,

- Object priority (e.g., emergency tools > inspection points), and

- Current robot availability.
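One way to turn these three criteria into assignments is a weighted cost plus a greedy matcher. This is a swapped-in heuristic, not the team's method (the post says an RL-based approach drove assignment), and it assumes a straight-line ETA at a fixed nominal speed, a made-up priority table, and arbitrary weights:

```python
from __future__ import annotations
import math
from dataclasses import dataclass

# Hypothetical priority table: the post only says emergency tools outrank inspection points.
PRIORITY = {"emergency_tool": 3, "part": 2, "inspection_point": 1}

@dataclass
class Robot:
    robot_id: str
    x: float
    y: float
    speed: float = 0.5       # assumed nominal speed, m/s
    available: bool = True

@dataclass
class Task:
    task_id: str
    kind: str
    x: float
    y: float

def eta(robot: Robot, task: Task) -> float:
    """Straight-line time to reach the task in seconds (ignores obstacles)."""
    return math.hypot(task.x - robot.x, task.y - robot.y) / robot.speed

def cost(robot: Robot, task: Task, w_eta: float = 1.0, w_prio: float = 10.0) -> float:
    """Lower is better: long ETAs are penalised, high-priority tasks rewarded."""
    return w_eta * eta(robot, task) - w_prio * PRIORITY.get(task.kind, 0)

def assign(robots: list[Robot], tasks: list[Task]) -> dict[str, str]:
    """Greedy matching: repeatedly commit the cheapest (available robot, open task) pair."""
    free = [r for r in robots if r.available]   # availability filter
    open_tasks = list(tasks)
    plan: dict[str, str] = {}
    while free and open_tasks:
        robot, task = min(((r, t) for r in free for t in open_tasks),
                          key=lambda pair: cost(*pair))
        plan[robot.robot_id] = task.task_id
        free = [r for r in free if r is not robot]
        open_tasks = [t for t in open_tasks if t is not task]
    return plan

robots = [Robot("bot_1", 0.0, 0.0), Robot("bot_2", 10.0, 0.0),
          Robot("bot_3", 5.0, 5.0, available=False)]
tasks = [Task("t1", "emergency_tool", 9.0, 0.0), Task("t2", "inspection_point", 1.0, 0.0)]
plan = assign(robots, tasks)
```

Here `bot_2` takes the emergency tool because it is close and the task is high priority, leaving the inspection point to `bot_1`; `bot_3` is skipped because it is busy. A greedy pass is not guaranteed to be optimal; for strict one-task-per-robot matching, the Hungarian algorithm gives the exact minimum-cost assignment.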

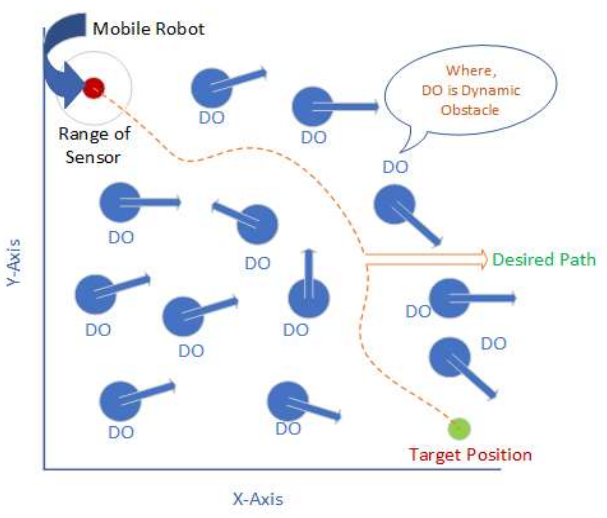

We used an RL-based technique (PPO combined with DWA) and updated task assignments in real time to keep the swarm operating efficiently. This method was inspired by a research paper.

Dynamic Obstacle avoidance using RL from research paper

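The DWA half of the obstacle-avoidance stack can be sketched on its own. Below is a minimal version of the classic Dynamic Window Approach for a differential-drive robot: sample linear and angular velocities reachable within one control period, forward-simulate each pair, discard those that collide or cannot brake in time, and score the rest on goal heading, clearance, and speed. All limits and weights are assumed values, obstacles are plain points (for example from LiDAR or YOLO detections), and the PPO component, along with how the team combined it with DWA, is not shown because the post does not describe it.

```python
from __future__ import annotations
import math

def simulate(x, y, th, v, w, dt=0.1, horizon=1.0):
    """Forward-simulate a unicycle (differential-drive) model at constant (v, w)."""
    traj = []
    for _ in range(int(round(horizon / dt))):
        th += w * dt
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        traj.append((x, y, th))
    return traj

def dwa_step(state, goal, obstacles, v_lim=(0.0, 0.6), w_lim=(-1.5, 1.5),
             acc=(0.5, 2.0), dt_ctrl=0.2, robot_radius=0.3,
             weights=(1.0, 0.8, 0.1), clear_cap=2.0, samples=(11, 21)):
    """Return the (v, w) command chosen by a basic Dynamic Window Approach.

    state = (x, y, theta, v, w); goal = (x, y); obstacles = [(x, y), ...].
    Falls back to (0.0, 0.0), i.e. stop, if no sampled command is admissible.
    """
    x, y, th, v0, w0 = state
    w_heading, w_clear, w_vel = weights
    # Dynamic window: velocities reachable within one control period, clipped to limits.
    v_min, v_max = max(v_lim[0], v0 - acc[0] * dt_ctrl), min(v_lim[1], v0 + acc[0] * dt_ctrl)
    w_min, w_max = max(w_lim[0], w0 - acc[1] * dt_ctrl), min(w_lim[1], w0 + acc[1] * dt_ctrl)
    nv, nw = samples
    best, best_cost = (0.0, 0.0), math.inf
    for i in range(nv):
        v = v_min + (v_max - v_min) * i / (nv - 1)
        for j in range(nw):
            w = w_min + (w_max - w_min) * j / (nw - 1)
            traj = simulate(x, y, th, v, w)
            # Clearance: closest approach of the predicted path to any obstacle point.
            clearance = min((math.hypot(px - ox, py - oy) - robot_radius
                             for px, py, _ in traj for ox, oy in obstacles),
                            default=math.inf)
            # Admissible only if collision-free and the robot can still brake in time.
            if clearance <= 0.0 or v > math.sqrt(2.0 * acc[0] * clearance):
                continue
            ex, ey, eth = traj[-1]
            d = math.atan2(goal[1] - ey, goal[0] - ex) - eth
            heading_err = abs(math.atan2(math.sin(d), math.cos(d)))  # wrapped to [0, pi]
            cost = (w_heading * heading_err
                    - w_clear * min(clearance, clear_cap)
                    - w_vel * v)
            if cost < best_cost:
                best, best_cost = (v, w), cost
    return best
```

With no obstacles, a robot at rest facing its goal picks the fastest straight-ahead command in its window; with an obstacle 0.35 m ahead and a 0.3 m robot radius, it slows to a near stop instead.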

Simulation Results 🎥

We showcased 4 key scenarios in Gazebo:

- Scalability in Larger Environments – 20 m × 20 m maps with high clutter.

- Swarm Size Flexibility – 8+ bots navigating collaboratively.

- Dynamic Obstacle Handling – Human avatars moving unpredictably.

- Map Regeneration & Persistence – Reloading environments from stored maps.

Video demos and performance logs were uploaded to the GitHub repository.

AI Models Behind the Scenes

- YOLOv8: Custom-trained for object detection, with inference running inside a ROS 2 node.

- RL Agent: Trained in simulated environments to learn safe navigation patterns in the presence of moving agents.

- Costmap Layer: ROS 2 Nav2's layered costmap merges YOLO detections with LiDAR and odometry data for real-time updates.
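To illustrate the merge step, the sketch below mimics, in pure Python, how a layer's grid can be folded into the master costmap using the maximum-cost rule implemented by Nav2's `CostmapLayer::updateWithMax`: cells a layer has no information about are skipped, and otherwise the higher cost wins. The cost constants follow Nav2's conventions; the YOLO "layer", the circular safety radius, and the helper names are assumptions for illustration, not the team's actual plugin.

```python
from __future__ import annotations
import math

# Nav2 cost conventions (nav2_costmap_2d cost values).
FREE_SPACE, INSCRIBED, LETHAL, NO_INFORMATION = 0, 253, 254, 255

def make_grid(width: int, height: int, fill: int = NO_INFORMATION) -> list[list[int]]:
    """Row-major grid of costs, indexed grid[row][col]."""
    return [[fill] * width for _ in range(height)]

def stamp_detection(layer, cx, cy, radius_m, resolution, origin=(0.0, 0.0)):
    """Hypothetical YOLO layer update: mark cells whose centre lies within
    radius_m of a detected object (world coordinates, metres) as LETHAL."""
    h, w = len(layer), len(layer[0])
    col0 = int((cx - origin[0]) / resolution)
    row0 = int((cy - origin[1]) / resolution)
    r = int(math.ceil(radius_m / resolution))
    for row in range(max(0, row0 - r), min(h, row0 + r + 1)):
        for col in range(max(0, col0 - r), min(w, col0 + r + 1)):
            x = origin[0] + (col + 0.5) * resolution  # cell-centre coordinates
            y = origin[1] + (row + 0.5) * resolution
            if math.hypot(x - cx, y - cy) <= radius_m:
                layer[row][col] = LETHAL

def update_with_max(master, layer):
    """Fold one layer into the master grid with the max-cost rule."""
    for row in range(len(master)):
        for col in range(len(master[0])):
            c = layer[row][col]
            if c == NO_INFORMATION:
                continue  # layer knows nothing here: keep the master value
            m = master[row][col]
            if m == NO_INFORMATION or m < c:
                master[row][col] = c

master = make_grid(10, 10, FREE_SPACE)   # e.g. built from LiDAR, 0.1 m cells
master[0][0] = NO_INFORMATION            # one unobserved cell
yolo = make_grid(10, 10)                 # YOLO layer starts with no information
stamp_detection(yolo, 0.25, 0.25, 0.1, 0.1)  # a person detected at (0.25, 0.25)
update_with_max(master, yolo)
```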

Key Outcomes 📈

| Feature | Status |
|---|---|
| Multi-Robot Coordination | ✅ Implemented |
| Dynamic Obstacle Avoidance | ✅ Integrated |
| YOLO-based Object Detection | ✅ Trained |
| Scalability Tests | ✅ Passed |

🔗 GitHub and Demo

GitHub Repo: m2_Kalyani_BharatForge_44

Solving Bharat Forge’s Inter-IIT challenge gave us deep insights into how modern robotics, AI, and scalable software architecture can come together to transform industries. Our solution not only achieves markerless indoor autonomy but also lays the foundation for factory-wide AMR coordination at scale.

Made by the Robotics Team #2 of IIT Bhilai

Powered by ROS 2, MongoDB and YOLOv8